The future of human interaction with machines

Emotions matter. We know it intuitively, but our machines don’t. They do not know how we feel, they have no idea what we mean, and they cannot tell whether a quickly uttered “have a nice day” is genuine. This emotional blind spot is not due to a lack of trying. Researchers and computer scientists have spent decades trying to endow machines with these qualities that we humans take for granted.

Over the past 10 years there have been great breakthroughs in speech synthesis, or text-to-speech, which can now produce human-sounding inflections (for example, a rising tone at the end of a sentence where appropriate), and in speech recognition. But here too the focus has been solely on words. Speech recognition is essentially the reverse of text-to-speech: the computer hears what you say and converts it into text. It does not register the tremble in your voice, the enthusiasm in your inflection, the sorrow reflected in your modulation, or the assertiveness with which you guide your vocal intonation.

Researchers may now have found a way. By studying how people interact with computers, and how well computers are or are not designed for successful interaction with human beings, they have opened up a new field called “Emotions Analytics.” It focuses on identifying and analysing the full spectrum of human emotions, including mood, attitude and emotional personality. Now imagine Emotions Analytics embedded in your mobile applications and devices, opening up a new dimension of human-machine interaction. Think of an “emotions analytics engine” that takes a 20-second snippet of your spoken vocal intonation and, almost in real time, presents a succinct analysis of the underlying emotional communication on a dashboard.

One early example is Moodies, an app that asks users to speak their minds and then analyses the speech into primary and secondary moods, the latter usually reflecting a subconscious, underlying emotional state. Because the app analyses vocal cues and intonation rather than the content of your words, the software can already analyse any language (it was tested on more than 30).
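To make the idea of analysing vocal cues rather than words a little more concrete, here is a minimal Python sketch that computes two language-independent prosodic features per short frame of audio: RMS energy (loudness) and zero-crossing rate. It is an illustration under stated assumptions, not how Moodies or any commercial engine actually works. The function names `frame_features` and `summarize_prosody` are hypothetical, the input is assumed to be a list of mono samples scaled to the range -1.0 to 1.0, and a real system would add pitch tracking and a trained model that maps such features to moods.

```python
import math


def frame_features(samples, frame_size):
    """Hypothetical helper: split a mono signal into non-overlapping frames and
    return (rms_energy, zero_crossing_rate) for each full frame.

    Assumes samples are floats in [-1.0, 1.0]; any partial frame at the end
    is dropped.
    """
    if frame_size < 2:
        raise ValueError("frame_size must be at least 2")
    features = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        # RMS energy: a simple measure of how loud the frame is.
        rms = math.sqrt(sum(s * s for s in frame) / frame_size)
        # Zero-crossing rate: fraction of adjacent sample pairs that change sign.
        crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
        features.append((rms, crossings / (frame_size - 1)))
    return features


def summarize_prosody(features):
    """Hypothetical helper: reduce per-frame features to utterance-level statistics.

    Energy variability is one crude cue for how animated or flat a voice sounds.
    """
    if not features:
        raise ValueError("signal is shorter than one frame")
    energies = [rms for rms, _ in features]
    rates = [zcr for _, zcr in features]
    mean_energy = sum(energies) / len(energies)
    variance = sum((e - mean_energy) ** 2 for e in energies) / len(energies)
    return {
        "mean_energy": mean_energy,
        "energy_variability": math.sqrt(variance),
        "mean_zcr": sum(rates) / len(rates),
    }


if __name__ == "__main__":
    # Synthetic one-second "utterance" at 8 kHz: a 200 Hz tone whose loudness
    # swells and fades, standing in for a recorded voice.
    rate = 8000
    signal = [
        (0.2 + 0.6 * math.sin(math.pi * n / rate)) * math.sin(2 * math.pi * 200 * n / rate)
        for n in range(rate)
    ]
    # 20 ms frames, i.e. 160 samples at 8 kHz.
    print(summarize_prosody(frame_features(signal, 160)))
```

Non-overlapping frames keep the sketch short; practical speech pipelines usually overlap frames and apply a window function before extracting features. With a real recording, the samples would first have to be read from a file (for example with Python's standard-library `wave` module) and converted to floats.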

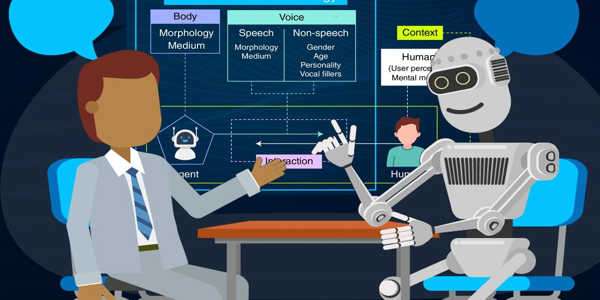

One important factor in Human-Computer Interaction is that different users form different conceptions, or mental models, of their interactions, and have different ways of learning and retaining knowledge and skills (different “cognitive styles,” as in the popular distinction between “left-brained” and “right-brained” people). Cultural and national differences also play a part. Another consideration in studying or designing Human-Computer Interaction is that user interface technology changes rapidly, offering new interaction possibilities to which previous research findings may not apply. Finally, users’ preferences change as they gradually master new interfaces.

The future of human interaction with machines is likely to be characterized by increasingly seamless and natural interactions. Advances in artificial intelligence (AI), machine learning, and natural language processing (NLP) are making it possible for machines to understand and respond to human input in more sophisticated ways. This has the potential to transform many aspects of our lives, from work and entertainment to healthcare and education.

One trend that is likely to continue is the growing use of voice interfaces, such as the virtual assistants Amazon’s Alexa, Google Assistant and Apple’s Siri. These systems are becoming more intelligent and intuitive, allowing people to interact with them in more natural ways, using conversational language instead of rigid commands. In the future, voice interfaces may become even more pervasive, integrated into everyday objects and environments.

Another trend is the development of more advanced haptic interfaces, which allow people to interact with machines through touch and movement. Such interfaces can be combined with augmented reality and virtual reality, which provide immersive experiences that feel more natural than traditional computer interfaces.

As machines become more sophisticated, they may also become more autonomous, making decisions and taking actions on their own without human intervention. This could have profound implications for industries such as transportation and manufacturing, where autonomous systems could revolutionize the way things are done.

Overall, the future of human interaction with machines is likely to be characterized by ever-increasing levels of integration and sophistication, blurring the lines between human and machine intelligence.

In addition to voice and haptic interfaces, other types of interfaces are also being developed, such as brain-computer interfaces (BCIs). BCIs allow people to control machines using their thoughts, potentially enabling new forms of communication and control for people with disabilities.

As machines become more intelligent and autonomous, concerns about their impact on society are also growing. Some experts worry that the rise of automation and AI could lead to job displacement and economic inequality. There are also concerns about privacy and security, as machines collect and process vast amounts of personal data.

To address these concerns, researchers and policymakers are working to develop ethical guidelines for the development and use of AI and autonomous systems. They are also exploring ways to ensure that the benefits of these technologies are shared equitably across society.

Ultimately, the future of human interaction with machines will be shaped by a combination of technological progress and social and ethical considerations. As machines become more intelligent and integrated into our lives, it will be important to ensure that they are used in ways that benefit everyone and that human values and perspectives are taken into account.

One area where the future of human interaction with machines is particularly exciting is robotics. As robots become more advanced, they have the potential to take on tasks that are too dangerous or difficult for humans to perform. For example, robots can be used to explore space or to assist in complex surgical procedures with greater precision than human hands alone can achieve.

Advances in machine learning are also enabling machines to learn from their interactions with humans and improve their performance over time. This could lead to machines that can adapt to a wide range of situations and perform tasks more efficiently than ever before.

Another important trend is the increasing use of machine-to-machine (M2M) communication, where machines communicate with each other to perform tasks more efficiently. For example, in a smart factory, machines can communicate with each other to optimize production processes and reduce waste.

As the use of machines becomes more widespread, it will also become increasingly important to design machines that are user-friendly and easy to understand. This will require collaboration between designers, engineers, and social scientists to ensure that machines are developed in a way that is both technically advanced and socially responsible.

In the end, the future of human interaction with machines will be shaped by a wide range of factors, including technological progress, societal values, and economic considerations. As these technologies continue to evolve, it will be important to ensure that they are used in ways that benefit society as a whole and promote human flourishing.

One key challenge in the future of human interaction with machines is the development of ethical frameworks and regulations that can keep pace with rapidly evolving technologies. The rise of AI and automation has raised concerns about issues such as bias, discrimination, and privacy, and there is a growing need to establish guidelines that ensure that these technologies are developed and used in a responsible and ethical manner.

In addition to ethical considerations, there are also technical challenges that must be overcome. One key issue is the need for more powerful and energy-efficient computing technologies, which are necessary to support the development of increasingly sophisticated AI and machine learning algorithms.

Another challenge is the need to develop more robust and secure systems to protect against cyberattacks and other security threats. As machines become more integrated into our lives, they will become increasingly attractive targets for hackers and other malicious actors, making strong security protocols essential.

Despite these challenges, the future of human interaction with machines is full of promise. By harnessing the power of AI and other advanced technologies, we can create machines that are more intelligent, efficient, and adaptable than ever before. These machines have the potential to transform many aspects of our lives, from healthcare and education to transportation and manufacturing, and to enable new forms of human-machine collaboration and interaction that we can only begin to imagine.

Another important consideration for the future of human interaction with machines is the impact that these technologies will have on social dynamics and human relationships. As machines become more intelligent and capable, they may take on increasingly complex roles in our lives, blurring the lines between human and machine interactions.

For example, robots and virtual assistants may become more common in the workplace, taking on tasks traditionally performed by human workers. This could lead to significant changes in the nature of work and employment, with implications for job security, wages, and social dynamics.

Similarly, as machines become more integrated into our personal lives, they may become more influential in shaping our behavior and decision-making processes. This could have both positive and negative effects, depending on how these technologies are designed and used.

As a result, it will be important to carefully consider the social and psychological impacts of these technologies, and to design them in a way that promotes human well-being and flourishing. This may require new approaches to education and training, as well as new models of governance and regulation to ensure that these technologies are used in a responsible and beneficial way.

Ultimately, the future of human interaction with machines is likely to be shaped by a complex interplay of technological, social, and cultural factors. By taking a proactive and collaborative approach to the development and deployment of these technologies, we can help to ensure that they are used in ways that benefit society as a whole, and that promote human flourishing and well-being.